The First Battle of Bull Run in the American Civil War (1861), the Battle of Tannenberg in World War I (1914), the Battle of Midway in the Pacific War (1942), the Inchon Landing on the Korean peninsula (1950) and the Six-Day War around Israel (1967): these are only a few of the numerous examples in which intelligence that was believed, heard and understood played a decisive role in violent conflict (Elder, 2006). These five instances, together with a vast number of intelligence failures, underline the importance of intelligence receptivity in tactical, operational and strategic planning. This paper outlines three crucial factors for successful intelligence reception, provides case-study examples of their application[1] and formulates recommendations for enhanced receptivity.

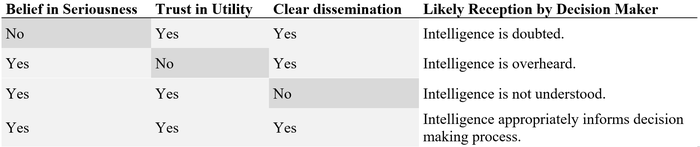

Intelligence scholar Erik J. Dahl argues in his 2013 paper that two conditions are necessary to create appropriate receptivity among decision makers: belief in the seriousness of a specific issue and overall trust in the utility of intelligence. Overall trust belongs to the domain of intelligence recipients: it is rooted in the decision maker’s normative system, is hampered by biases or immature cognitive concepts and typically changes only over a longer period of time. Belief in a specific matter, by contrast, depends on the availability and certainty of evidence for a claim, which is the domain of intelligence providers. Since each of these factors falls into either the sender or the receiver domain (Lacasa-Gomar, 1970), this paper adds a third factor that accounts for deficiencies in the communication between intelligence provider and recipient: the problem of dissemination.

Belief in the Seriousness of the Issue – Uncertainty & Analysis Problems

Uncertainty problems: At the heart of intelligence collection and analysis lies uncertainty about what is known. If the assessments, intentions or plans of an adversary were known and certain, and if no ulterior motives, secret strategies or hidden resources could be suspected, intelligence would be superfluous. In reality, not only the unavailability of information but also internal organizational deficiencies undermine the quality and certainty of intelligence. Even if decision makers trust the utility of intelligence in general, the case-specific question “How much evidence is enough?” can hamper intelligence receptivity. This was the case in the Arab-Israeli Yom Kippur War of 1973, where the ambiguity inherent in intelligence hindered an effective Israeli response (Kahana, 2002). In the case of the Chinese intervention in Korea (1950), overconfidence in the certainty of intelligence (and a lack of communication about it) forced UN and South Korean units to retreat, as they could not withstand the misjudged Chinese intervention that began on October 19 (Cohen, 1990).

A natural reaction to uncertainty and ambiguity is to request more intelligence (collection). While additional information from potentially different intelligence sources can help reduce ambiguity and enhance situational awareness, more collection is no panacea: (1) More information can make a situation less transparent and increase the preexisting level of ambiguity. (2) An imprudent call for more information might distract from an adequate warning-versus-threat assessment and waste valuable time to act. (3) Better collection does not increase certainty if the subsequent intelligence analysis is poor.

The ambiguity of information also aggravates the “cry wolf” problem of intelligence warning. Because a successful warning may prevent the predicted scenario from occurring, warnings can easily be perceived as “always wrong” despite their crucial role in improving a conflict’s outcome. The so-called “warning tiredness” (Gentry & Gordon, 2018) can deepen a decision maker’s skepticism towards uncertain intelligence, lead consumers to ignore future warnings and ultimately undermine their trust in the utility of intelligence.

Analysis Problems: A key prerequisite for the provision of timely and as-certain-as-possible intelligence is effective analysis. Various examples show how organizational deficiencies undermine the belief in the seriousness of a topic or the overall trust in intelligence. In the case of the German invasion of Norway in spring 1940, the British had successfully broken the German Air Force’s “Enigma”, a highly sophisticated encryption method. Nonetheless, they were unable to generate relevant insight because there were too few military experts to review the vast amount of suddenly available information and assess the relevant intelligence (Claasen, 2004). A lack of sufficiently qualified US and Israeli intelligence officers likewise undermined an adequate assessment of a potential USSR intervention in the Israeli-Egyptian war of 1969-70, as the officers lacked Soviet-specific background and were unable to infer Soviet intentions from the gathered intelligence (Adamsky, 2005).

In addition to poorly trained staff, a poorly adjusted organizational setup can produce insufficient analysis. German military intelligence (Abwehr) during the 1940 air war over England was so fragmented that individual units did not share information and instead competed against one another in collection and analysis, an organizational phenomenon known as “turf war” (Cox, 1990). Similar organizational maladjustments contributed to the U.S. failure to foresee a Soviet intervention in the Israeli-Egyptian war of 1969-70. U.S. and Israeli intelligence agencies shared highly uncertain findings, but each relied on the other to substantiate them. Trusting the partner organization instead of reviewing its information led to a mutual reinforcement of flawed perceptions (Adamsky, 2005). Another major obstacle to providing as-certain-as-possible intelligence is known as “stove piping”. Identified as a major deficiency of British intelligence during the Northern Ireland conflict (approx. 1970-1995), “stove piping” refers to managing different types of intelligence (here: security and political intelligence) so separately that they appear independent even though they belong together. This maladjustment leads to incomplete intelligence analysis (Craig, 2018).

Some organizational deficiencies appear rather trivial but are nonetheless problematic: During the Second Lebanon War (2006), Israeli intelligence was classified too highly to reach decision makers at lower levels, which prevented them from substantiating their decisions with better intelligence (Bar-Joseph, 2007). Similarly, during Operations Desert Shield/Storm (1990-91), only four out of twelve intelligence systems were interoperable. This meant that “[t]he Navy had their own systems, which could not interface with the Army's systems, which could not interface with the Marines', which could not…” (Oversight and Investigations Subcommittee, 1993, p. 14), which unintentionally reduced the corroboration of intelligence.

Trust in the Utility of Intelligence – Concepts & Biases

Even with high certainty about an issue, intelligence is useless if decision makers do not trust intelligence in general. A lack of trust in its utility is rooted in a decision maker’s psychological deflection mechanisms and in the two-faced “Problem of Concept”[2], and it heavily undermines intelligence receptivity.

Ideological Concept. The problem of ideological concepts was at its height during the first half of the 20th century: With strongly ethnocentric reasoning, the Japanese claimed superiority over the U.S. in WWII, focusing mainly on the “superior virtue” of their soldiers, a one-sided estimate that obstructed an unbiased, objective assessment of the adversary’s strength (Coox, 1990). An equally flawed perception could be observed on the other side of the Pacific War: Convinced of its technological superiority, the U.S. Navy discredited its own intelligence on, for example, the capabilities of the Japanese Yamato-class battleships or the Japanese Navy’s night-fighting abilities (Mahnken, 1996). In a final example of ideology-driven concepts, the British Royal Navy systematically underestimated intelligence on a potential German invasion of Norway, as it complacently relied on its naval superiority and assumed that Nazi Germany had no interest in invading Norway (Claasen, 2004).

Over-rationalization Concept. In addition to the ideological concept, a second, more contemporary form of intelligence-obstructing mental model exists: the over-rationalization concept. Over-rationalization undermines trust in intelligence because it demands that all insights “make sense”. This is hardly ever the case: Even though groups tend to be more rational than individuals (Kugler, Kausel, & Kocher, 2012) and it is reasonable to presume some degree of rationality in an adversary’s behavior, not all actions are motivated solely by reason; factors such as politics, organizational dynamics or emotion also play a role. Moreover, intelligence organizations typically see only part of the picture and might miss relevant pieces of the rationality puzzle. Assuming that one can always see and understand the adversary’s rational decision making, or even waiting for it, can be highly counter-productive, as two examples from the Middle East show: On the eve of the Yom Kippur War in 1973, Israel misjudged the possibility of an Egyptian attack because it relied on an over-rationalized concept instead of intelligence evidence, assuming that Egypt would not enter the war until it was able to reach Israel’s main airfields with long-range Sukhoi bombers and Scud missiles, an over-rationalized misjudgment, as history showed (Kahana, 2002). A little earlier, the U.S. was convinced, despite indications to the contrary, that the USSR would not enter a proxy war in the Middle East, and it was surprised by the Soviet intervention in the Israeli-Egyptian war of 1969-70 (Adamsky, 2005). In its extreme form, an over-rationalization concept can lead decision makers to dismiss even particularly clear cases backed by very strong intelligence. Fundamentally mistrusting all intelligence, such decision makers apply the irrefutable (and therefore bad) argument that the findings cannot be trusted precisely because they are so unambiguous, since an adversary would “try harder” to conceal its intentions or material.

Cognitive biases: The lack of trust, as well as the ideological and over-rationalization concepts, are reinforced by psychological deflection mechanisms against undesired information. These decision-maker biases include: (1) requiring a higher validation threshold for undesired than for desired information; (2) challenging the credibility of the source more frequently if the information is undesired; (3) requesting more information while silently hoping that it will contradict the undesired information; (4) focusing on the remaining ambiguity in the information instead of conducting a rational warning-versus-threat assessment (Betts, 1980). A well-known example is, again, Israeli intelligence receptivity in the Yom Kippur War: Given the highly fragile situation, the Israeli minister of defense clung to the remaining uncertainty and decided against a pre-emptive Israeli strike on Egypt despite affirmative indications. Together with the concept-driven misjudgment of its adversary’s plans, this left Israel surprised by the Egyptian attack, which led to Egypt’s later (strategic) victory (Kahana, 2002).

Recipient-specific Communication – the Dissemination Problem

Even if a decision maker generally trusts intelligence and fairly unambiguous information is available, intelligence organizations need to ensure that their message is understood. In the case of Operations Desert Shield/Storm, the message suffered from communication that was not tailored to its recipients. The report of the Oversight and Investigations Subcommittee suggests “displaying intelligence data in digestible form telling commanders, for example, that a bridge is unusable by military vehicles rather than communicating an engineer's calculation that the bridge is 52 percent destroyed.” (Oversight and Investigations Subcommittee, 1993, p. 3) A second form of broken communication is particularly prevalent in autocratic regimes, where intelligence officers fear personal reprisals for communicating “bad news”. For example, German Luftwaffe (air force) intelligence manipulated or withheld negative results for fear of hostile reactions and slowed promotion. This led to a substantial underestimation of the capabilities of the British Royal Air Force in World War II (Cox, 1990). Of all the pitfalls in the triad, the frequently overlooked dissemination problem has the least negative effect when neglected. When addressed, however, it can reinforce the role of intelligence in decision making, as good dissemination conveys the level of ambiguity of intelligence, adequately transports the assessment of an issue’s seriousness and substantiates overall trust in the utility of intelligence.

Recommendations for Improved Intelligence Receptivity

Intelligence receptivity decides whether intelligence organizations can appropriately inform the decision-making process. Improving receptivity is therefore closely linked to improving intelligence processes overall.

Strategic vs tactical intelligence. Agencies should strictly differentiate between strategic and tactical intelligence and inform decision makers about the respective level and its limitations: While strategic intelligence is often perceived as too abstract, tactical intelligence is hard to acquire and substantiate (Dahl, 2013). To avoid overly abstract warnings, as seen in the Pearl Harbor case, decision makers need to be aware of the level of the information they receive. Intelligence analysts can put this distinction to use by producing periodic strategic intelligence reports to steer intelligence priorities and ad-hoc tactical intelligence reports for timely warnings and alerts on opportunities. In addition to sensitizing decision makers to the level of intelligence, analysts must also explain the “cry wolf” problem to decision makers and make them aware of the misconceptions that a successful, deterring warning can create. Lastly, recipient-specific communication helps to convey a message: A tactical briefing for a Special Operations Forces team must look different from a strategic intelligence briefing for a policy maker. To enhance intelligence receptivity, it is therefore key to speak the language of those who receive the information.

Warning of warning. A particular challenge for decision makers is their receptivity to intelligence warnings. Inadequate receptivity in this field led to major tactical warning failures such as Pearl Harbor (ignored indications such as the irregular change of call signs, see Saito, 2020) or the September 11 attacks (repeated warnings by the Federal Aviation Administration about the use of airplanes in terrorist attacks did not lead to appropriate counter-measures, see Gentry, 2010). The extensive literature on the warning problem (for example, Betts, 1980, or Gentry & Gordon, 2018) recommends organizationally independent warning units, special recruitment and training of warning officers who interact with intelligence analysts in a hybrid model, and an emphasis on opportunities as well as warnings. These recommendations will not only help improve intelligence warning but also prevent the detrimental effects of “warning tiredness” on trust in intelligence.

Uncertainty & Bias Awareness. Decision makers are forced to decide in highly dynamic situations in which the level of uncertainty of the available information keeps changing. Adequately communicating the level of certainty is key to preventing over-reliance on or underestimation of information. Analogous to the statistical concept of a confidence interval, a simple approach is to generate best-case, medium and worst-case scenarios depending on the ambiguity level of the intelligence. Likewise, decision makers and intelligence analysts must pay close attention to the problem of cognitive biases in collection, analysis and decision making. Unnoticed assumptions about the adversary’s intentions, logic and conduct can be countered by acknowledging the limitations of one’s own assessment and by working in “counter-bias settings” such as competing teams within the organization, a pre-assigned in-team devil’s advocate or rationalization-sensitive war gaming.

This paper was submitted as the final paper for the course "Intelligence & War" at Columbia University's School of International and Public Administration (SIPA), taught by Prof. John A. Gentry, a former CIA and DIA officer and now Adjunct Associate Professor at SIPA.

[1] In discussing the following failures of intelligence reception, it is important to note that such events are rarely caused by a single factor but rather by a complex interplay of many. The decisiveness of any one factor is difficult to isolate, but elements of reception failure are believed to have played an important role in all examples provided.

[2] “Concept” is the translation of the Hebrew Ha-konceptzia, the insufficient guidelines Israeli intelligence used to assess the factors that would lead Egypt and Syria to enter a war before the Yom Kippur War of 1973.

LITERATURE

- Adamsky, Dima P. “Disregarding the Bear: How US Intelligence Failed to Estimate the Soviet Intervention in the Egyptian-Israeli War of Attrition.” Journal of Strategic Studies 28, no. 5 (2005): 803–31. https://doi.org/10.1080/01402390500393977.

- Bar-Joseph, Uri. “Israel’s Military Intelligence Performance in the Second Lebanon War.” International Journal of Intelligence and CounterIntelligence 20, no. 4 (2007): 583–601. https://doi.org/10.1080/08850600701472970.

- Betts, Richard K. “Surprise despite Warning.” Political Science Quarterly 95, no. 4 (1980): 551–72.

- Claasen, Adam. “The German Invasion of Norway, 1940: The Operational Intelligence Dimension.” Journal of Strategic Studies 27, no. 1 (2004): 114–35. https://doi.org/10.1080/0140239042000232792.

- Cohen, Eliot A. “‘Only Half the Battle’: American Intelligence and the Chinese Intervention in Korea, 1950.” Intelligence and National Security 5, no. 1 (1990): 129–49. https://doi.org/10.1080/02684529008432038.

- Coox, Alvin D. “Flawed Perception And Its Effect Upon Operational Thinking: The Case of the Japanese Army, 1937–41.” Intelligence and National Security 5, no. 2 (1990): 239–54. https://doi.org/10.1080/02684529008432052.

- Cox, Sebastian. “A Comparative Analysis of RAF and Luftwaffe Intelligence in the Battle of Britain, 1940.” Intelligence and National Security 5, no. 2 (1990): 425–43. https://doi.org/10.1080/02684529008432057.

- Craig, Tony. “‘You Will Be Responsible to the GOC’. Stovepiping and the Problem of Divergent Intelligence Gathering Networks in Northern Ireland, 1969–1975.” Intelligence and National Security 33, no. 2 (2018): 211–26. https://doi.org/10.1080/02684527.2017.1349036.

- Dahl, Erik J. “Why Won’t They Listen? Comparing Receptivity toward Intelligence at Pearl Harbor and Midway.” Intelligence and National Security 28, no. 1 (2013): 68–90. https://doi.org/10.1080/02684527.2012.749061.

- Elder, Gregory. “Intelligence in War: It Can Be Decisive.” Studies in Intelligence 50, no. 2 (2006): 13–26. https://www.cia.gov/library/center-for-the-study-of-intelligence/csi-publications/csi-studies/studies/vol50no2/83965Webk.pdf.

- Gentry, John A. “Intelligence Learning and Adaptation: Lessons from Counterinsurgency Wars.” Intelligence and National Security 25, no. 1 (2010): 50–75. https://doi.org/10.1080/02684521003588112.

- Gentry, John A., and Joseph S. Gordon. “U.S. Strategic Warning Intelligence: Situation and Prospects.” International Journal of Intelligence and CounterIntelligence 31, no. 1 (2018): 19–53. https://doi.org/10.1080/08850607.2017.1374149.

- Kahana, Ephraim. “Early Warning versus Concept: The Case of the Yom Kippur War 1973.” Intelligence and National Security 17, no. 2 (2002): 81–104. https://doi.org/10.1080/02684520412331306500.

- Kugler, Tamar, Edgar E. Kausel, and Martin G. Kocher. “Are Groups More Rational than Individuals? A Review of Interactive Decision Making in Groups.” Wiley Interdisciplinary Reviews: Cognitive Science 3, no. 4 (2012): 471–82. https://doi.org/10.1002/wcs.1184.

- Lacasa-Gomar, Jaime. “The Sender-Linker-Receiver Communication Model.” Iowa State University, 1970.

- Mahnken, Thomas G. “Gazing at the Sun: The Office of Naval Intelligence and Japanese Naval Innovation, 1918–1941.” Intelligence and National Security 11, no. 3 (1996): 424–41. https://doi.org/10.1080/02684529608432370.

- Oversight and Investigations Subcommittee. “Intelligence Successes and Failures in Operations Desert Shield/Storm.” Washington, D.C., 1993.

- Saito, Naoki. “Japanese Navy’s Tactical Intelligence Collection on the Eve of the Pacific War.” International Journal of Intelligence and CounterIntelligence 33, no. 3 (2020): 556–74. https://doi.org/10.1080/08850607.2020.1743948.
